Quality: carbon projects are method actors

Here are some key takeaways:

Standards Bodies create methodologies that determine the greenhouse gas benefits of different types of carbon projects and govern when, and how many, credits a project can issue.

Assessing the quality of a carbon credit project therefore begins with understanding the methodology it uses, and how it is applied.

Carbon ratings provide a toolkit for carbon market participants to dig into these differences at a project, sector and market level.

Contents

- Introduction

- Differences between Standards Bodies

- Methodologies in isolation do not drive credit quality

- Case studies of methodologies in action

- Sector expertise may vary from one Standards Body to another

- Conclusions

Please note that this insight may contain references to our previous rating scale and associated rating definitions. Our updated rating scale has been in effect since March 13th 2023.

Introduction

Much of the focus in the Voluntary Carbon Market (VCM) right now is on the type of evidence accepted by Standards Bodies, such as Verra, Gold Standard and Plan Vivo, to support a carbon project’s baseline assumption, and on the resulting risk of over-crediting.

We have seen a flurry of articles citing independent or academic analysis that points to evidence of pristine forests, or of aggressive deforestation, which seems to directly contradict the trend claimed by an issuing carbon project. While this is nothing new for those who work in the VCM, it is easy to understand why such pieces lead many to question the market’s credibility.

A fundamental way to better understand whether seemingly “new” evidence is or isn’t useful in assessing carbon credit quality is to delve into the methodology the project adheres to.

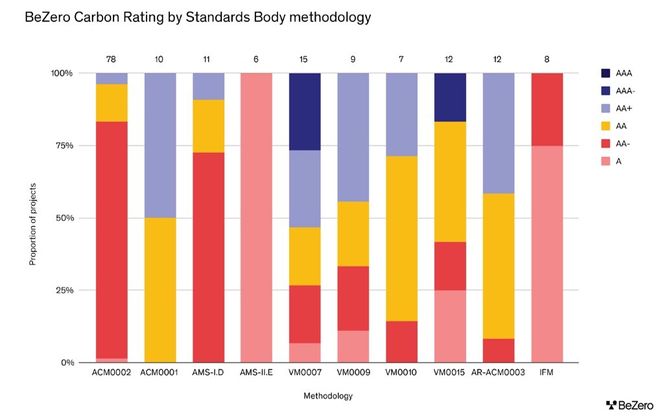

After all, the types of data and evidence a project is or isn’t allowed to submit for its carbon claims are set out in the methodology that project uses. For context, at BeZero we have assessed nearly 300 carbon projects using more than 70 different methodologies, and we observe that ratings are distributed within each methodology depending on how it is applied (Chart 1).

Chart 1. Proportion of BeZero Carbon Ratings by Standards Body methodology for each of the ten methodologies most frequently used by the rated projects.

Methodologies set out the parameters a project must meet to issue credits: they set system boundaries, determine crediting periods, provide tools to assess additionality, outline methods to identify and revise project baselines, and specify all the procedures needed to quantify GHG benefits.

These technical documents provide project developers and third party verification bodies with tools to estimate and quantify greenhouse gas (GHG) removals or reductions.

They are what Standards Bodies rely on for accreditation, i.e. to judge whether the project is avoiding or removing a tonne of carbon dioxide equivalent and should therefore be allowed to issue and sell credits for retirement.

Assessing this process is a key input into BeZero’s Carbon Ratings, and provides a useful reminder that iterating and improving these processes holds one of the keys to improving standards and quality in the VCM.

Differences between Standards Bodies

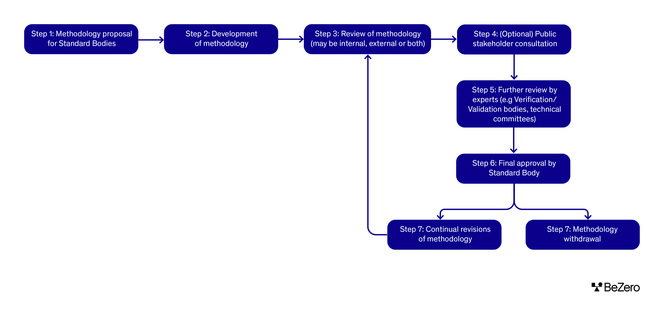

Standards Bodies differ in how their methodologies come into being, in the transparency of the underlying review processes, and in their use of external stakeholders to refine them (setting aside the variations in implementation the methodologies allow). Nonetheless, a typical lifecycle is shown in Chart 2.

Chart 2. An outline of the lifecycle of methodology development within the Voluntary Carbon Market.

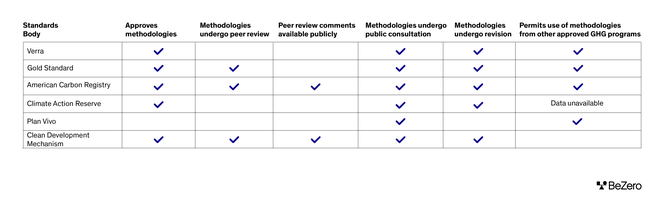

As Table 1 shows, the methodology development process varies by Standards Body, but it tends to include reviews (either internal or via a peer process) and public consultations.

Table 1. Differences in methodology development across the various Standards Bodies according to publicly disclosed information. The peer review process here refers to external, invited reviews.

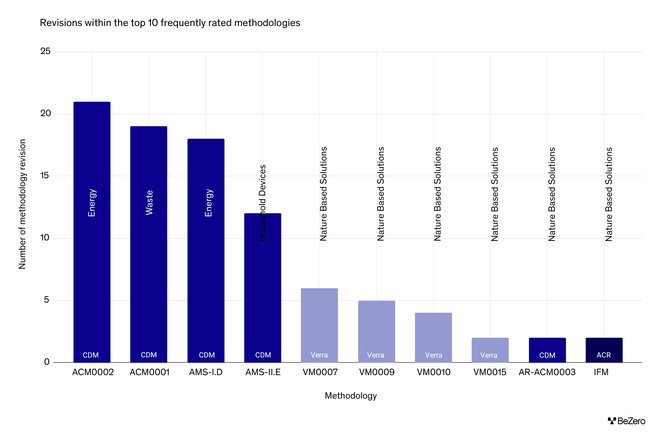

Once a methodology is established, it may be revised to keep pace with recent scientific developments, or it may be withdrawn. Among the methodologies that arise most frequently in BeZero’s assessment of carbon credits, some have been revised more than twenty times.

When considering the top ten most revised methodologies BeZero assesses, our team observes that most revisions occur within the sector groups of Energy and Nature Based Solutions, and specifically for methodologies within the Renewables and Avoided Deforestation sub-sectors. This makes sense given they are amongst the oldest project types.

These revisions add new elements, such as new technology types (e.g. geothermal within renewables), new biomass equations and additional carbon pools.

Chart 3. The number of revisions for each of the ten methodologies most frequently rated by BeZero Carbon. The colours correspond to the Standards Bodies.

Methodologies in isolation do not drive credit quality

The big question is whether methodology reviews and revisions are improving standards, and our analysis suggests the answer isn’t clear cut. We find that the number or type of reviews supporting methodology development does not, in itself, necessarily improve the quality of the credits generated.

One big reason for this is that today’s prevalent methodologies seem deliberately designed to be general. This keeps them relevant and applicable to a larger number of project types within a theme, independent of scale, location and data availability.

It follows that the wider a method’s applicability and the more diverse the approaches a project is allowed to take, the more variation in outcomes we should expect. Ensuring that every resulting credit is precisely equivalent to a tonne of avoided or removed carbon is essentially impossible.

Case studies of methodologies in action

Methodologies are central to the current debate about baselines and, more broadly, about credit quality. To see why, let’s look at two examples of baseline scenarios.

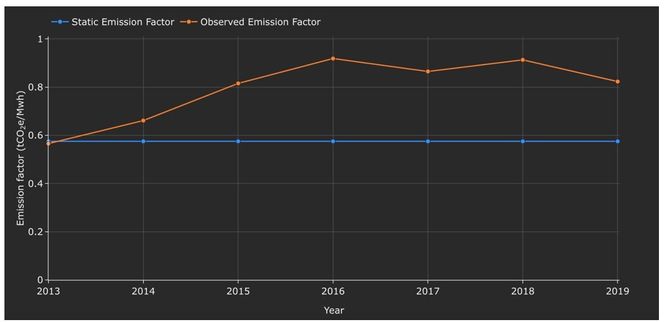

One of the most frequently assessed and reviewed methodologies for avoidance projects is ‘ACM0002’, which is commonly used in the renewables sub-sector. It allows projects to choose between two options for determining the baseline grid emission factor. Projects may either determine a grid emission factor before the start of their activities (ex ante) and apply it statically over the course of their crediting period, or update the baseline emission factor annually to reflect dynamic changes in the grid’s fossil fuel intensity.
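To make the difference between the two options concrete, the sketch below compares credits under a fixed ex ante factor and an annually updated one. It is a simplified illustration, not the full ACM0002 calculation: it assumes baseline emissions are simply net generation multiplied by the grid emission factor, ignores project emissions and leakage, and uses hypothetical figures. The function names are our own.

```python
# A minimal sketch, not the methodology's full calculation.
# Simplifying assumption: baseline emissions = net generation (MWh) x grid
# emission factor (tCO2e/MWh); project emissions and leakage are ignored.
# All figures below are hypothetical.
from typing import Dict, List


def baseline_emissions(generation_mwh: List[float],
                       emission_factors: List[float]) -> List[float]:
    """Per-vintage baseline emissions (tCO2e) for the given emission factors."""
    if len(generation_mwh) != len(emission_factors):
        raise ValueError("need one emission factor per vintage")
    return [g * ef for g, ef in zip(generation_mwh, emission_factors)]


def compare_baseline_options(generation_mwh: List[float],
                             ex_ante_ef: float,
                             annual_efs: List[float]) -> Dict[str, List[float]]:
    """Credits per vintage under a static (ex ante) vs annually updated factor."""
    static_efs = [ex_ante_ef] * len(generation_mwh)  # fixed for the crediting period
    return {
        "static": baseline_emissions(generation_mwh, static_efs),
        "dynamic": baseline_emissions(generation_mwh, annual_efs),
    }


# Hypothetical project generating 100,000 MWh a year on a decarbonising grid.
generation = [100_000.0, 100_000.0, 100_000.0]
credits = compare_baseline_options(generation, ex_ante_ef=0.80,
                                   annual_efs=[0.80, 0.75, 0.70])
excess = sum(credits["static"]) - sum(credits["dynamic"])
print(round(excess))  # 15000
```

On a grid that is decarbonising, fixing the factor ex ante credits more than the annually updated option would; on a grid whose fossil fuel intensity is rising, the reverse holds.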

Chart 4. An example of a carbon project using ACM0002 with a conservative static baseline across its various issued vintages.

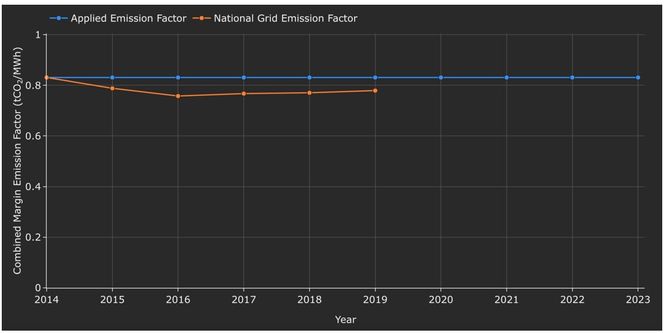

Charts 4 and 5 show two renewables projects in different countries, accredited by different Standards Bodies but both using ACM0002. They highlight differences in baseline construction. In Chart 4, the static baseline is below the observed grid emission factor. In Chart 5, it is above.

Using BeZero’s language of risk, we find the credits issued by the first project face lower over-crediting risk because the static baseline emissions factor is far lower than the observed grid emission factor. The converse is true, albeit not excessively so, for the second project.
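The comparison above can be expressed as a simple per-vintage ratio. This sketch assumes generation is identical under both baselines, so credited reductions scale linearly with the emission factor, and it treats a missing observed factor (as in Chart 5 after 2019) as unknown rather than guessing it. The emission factors are hypothetical and not read from either chart; the helper names are illustrative only.

```python
# A minimal sketch under simplifying assumptions: generation is the same in
# both cases, so credited reductions scale linearly with the emission factor.
# The emission factors below are hypothetical, not data from Charts 4 or 5.
from typing import List, Optional


def crediting_ratios(static_ef: float,
                     observed_efs: List[Optional[float]]) -> List[Optional[float]]:
    """Ratio of the static baseline factor to the observed grid factor per vintage.

    Below 1: the static baseline is conservative for that vintage.
    Above 1: credits exceed what the observed grid intensity would support.
    None: no observed factor is available (e.g. no public data for that year).
    """
    return [None if obs is None else static_ef / obs for obs in observed_efs]


def flag_vintages(ratios: List[Optional[float]]) -> List[str]:
    """Label each vintage by the direction of its baseline gap."""
    labels = []
    for r in ratios:
        if r is None:
            labels.append("no observed data")
        elif r <= 1.0:
            labels.append("conservative")
        else:
            labels.append("potential over-crediting")
    return labels


# Hypothetical projects resembling the two patterns described above.
project_a = crediting_ratios(0.60, [0.80, 0.78, 0.75])
project_b = crediting_ratios(0.90, [0.85, 0.82, None])
print(flag_vintages(project_a))
print(flag_vintages(project_b))
```

A ratio only indicates the direction and rough size of the gap; it says nothing about whether the observed grid data are themselves reliable.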

Chart 5. An example of a carbon project using ACM0002 with an outdated static baseline across its various issued vintages. Post-2019, no data are publicly available for the observed regional grid emissions factor.

This comparison demonstrates that it’s not necessarily the methodology in isolation that drives credit quality, but how a project applies it.

The obvious next question is whether Standards Bodies should allow both applications to qualify as approved baseline approaches. Whatever the answer, this highlights the positive feedback loop carbon ratings can have with methodological reviews: they add another layer of analysis to the market and help assess how the different paths chosen by projects affect the impact and quality of project delivery.

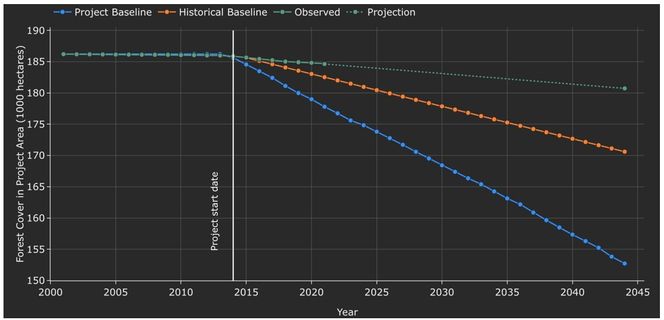

For the second case study, we look at the more complex example of an avoided deforestation project accredited under VM0015, a methodology used by over 30 registered projects in this sub-sector. Projects using this methodology can choose between historical averages and modelling approaches (variously defined) to calculate their baseline.
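As a point of reference for the historical-average option, the sketch below derives a mean annual proportional loss rate from a reference region’s forest cover and applies it to a project area. This is one simple reading of a historical-average baseline, assumed for illustration; VM0015’s actual procedures are more detailed, and the forest cover figures and function names are hypothetical.

```python
# A minimal sketch of one simple historical-average baseline: the reference
# region's mean annual proportional forest loss is applied to the project
# area. VM0015's actual procedures are more detailed; figures are hypothetical.
from typing import List


def average_annual_loss_rate(forest_cover_ha: List[float]) -> float:
    """Mean annual proportional forest loss from a yearly cover series (ha)."""
    if len(forest_cover_ha) < 2:
        raise ValueError("need at least two years of forest cover data")
    rates = [(prev - curr) / prev
             for prev, curr in zip(forest_cover_ha, forest_cover_ha[1:])]
    return sum(rates) / len(rates)


def project_baseline(initial_forest_ha: float, annual_rate: float,
                     years: int) -> List[float]:
    """Baseline forest cover, losing a constant share of forest each year."""
    cover = [initial_forest_ha]
    for _ in range(years):
        cover.append(cover[-1] * (1.0 - annual_rate))
    return cover


# Hypothetical reference region losing about 2% of its forest a year.
reference_region = [10_000.0, 9_800.0, 9_604.0, 9_411.92]
rate = average_annual_loss_rate(reference_region)
baseline = project_baseline(5_000.0, rate, years=3)
baseline_loss_ha = baseline[0] - baseline[-1]
print(round(rate, 4), round(baseline_loss_ha, 2))  # 0.02 294.04
```

Because the rate is anchored to observed history, this approach cannot, by construction, project deforestation accelerating beyond past trends, which is exactly what a modelling approach may do.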

Chart 6. An example of a carbon project using VM0015 with an aggressive baseline. The chart shows the baseline change in forest cover, compared with an alternative baseline (using the historical average deforestation rate in the project’s reference region), and observed/projected changes in the project area.

For this example project, the proponent estimates baseline future deforestation using a simulation model that propagates the rate and spatial pattern of deforestation through time, on a pixel-by-pixel basis, unconstrained by the historical average deforestation rate.

We find that this approach may lead to an over-estimated baseline, because of inherent uncertainties in the many assumptions adopted, and the lack of independent testing and validation of the model.

As Chart 6 shows, the alternative of using a historical average to set baseline deforestation implies a markedly different scenario, with substantially less assumed deforestation. While both approaches are allowed under the methodology, the market needs a tool such as ratings to distinguish, project by project, between the risks associated with each, such as over-crediting.
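One way to size the gap between two permitted baselines is to ask what share of the avoided deforestation implied by the modelled baseline would survive under the historical-average alternative. The sketch assumes avoided deforestation is simply baseline forest loss minus observed loss in the project area; leakage, buffer deductions and the conversion from hectares to tCO2e are deliberately left out. The hectare values and function names are hypothetical, not taken from Chart 6.

```python
# A minimal sketch, assuming avoided deforestation = baseline forest loss minus
# observed loss in the project area. Leakage, buffer deductions and the
# conversion from hectares to tCO2e (via carbon stock densities) are omitted.
# All figures are hypothetical, not read from Chart 6.


def avoided_loss_ha(baseline_loss_ha: float, observed_loss_ha: float) -> float:
    """Avoided deforestation implied by a baseline; never negative."""
    return max(baseline_loss_ha - observed_loss_ha, 0.0)


def unsupported_share(modelled_loss_ha: float, historical_loss_ha: float,
                      observed_loss_ha: float) -> float:
    """Share of the modelled baseline's avoided deforestation that the
    historical-average alternative would not support."""
    claimed = avoided_loss_ha(modelled_loss_ha, observed_loss_ha)
    alternative = avoided_loss_ha(historical_loss_ha, observed_loss_ha)
    if claimed == 0.0:
        return 0.0
    return max(claimed - alternative, 0.0) / claimed


# Hypothetical cumulative losses over the same period (ha).
share = unsupported_share(modelled_loss_ha=1_200.0, historical_loss_ha=400.0,
                          observed_loss_ha=100.0)
print(round(share, 3))  # 0.727
```

A high share does not prove over-crediting on its own, since the historical average may itself understate genuine future pressure; it flags where the choice of baseline approach carries most of the claimed benefit.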

This has wider implications for thinking about how Standards Bodies consider, revise and review baselines too.

There is much debate about how best to use geospatial modelling to assess crucial components of carbon accounting, such as project baselines, leakage, non-permanence risk and changes in carbon stock densities. There are better and worse implementations of each of these (see our recent client newsletter for an update on our approach).

What we highlight here is that even where a project uses apparently sophisticated geospatial techniques, this does not always ensure a higher-quality outcome. Project context is king when it comes to reaching a sensible and reasonable conclusion.

It also suggests the VCM faces a steep learning curve before market participants can reliably tell the difference.

Sector expertise may vary from one Standards Body to another

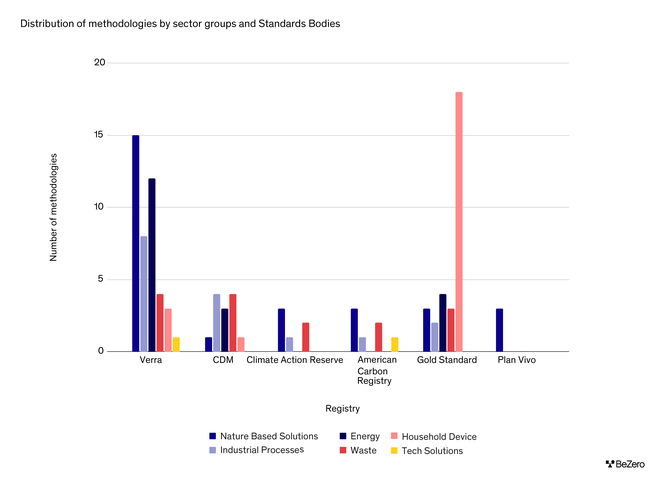

Another point of differentiation we observe is that Standards Bodies have often tended to specialise in methodology development for different sectors.

We are often asked whether certain Standards Bodies are better than others. However, we believe such judgements are too simplistic, for a couple of reasons:

The relative strengths and weaknesses of a methodology are of course important. However, credit quality ultimately depends as much on how a methodology is applied as on the methodology or Standards Body per se (see above). Judging credit quality therefore requires bespoke, project-specific assessments of how a project adheres to and deviates from its methodology, how the methodology and associated tools are caveated in their use, and what locally specific supporting data the project draws on.

Standards Bodies can focus on different sector groups rather than trying to accredit projects across the whole market, which means they may not be comparable with one another on a like-for-like basis. For example, among the projects we have rated, Standards Bodies such as Verra and Plan Vivo focus on Nature Based Solutions, whilst Gold Standard centres quite distinctly on Household Devices (Chart 7). Again, this is why a risk-based ratings methodology like BeZero’s, which applies to the entire VCM rather than to one individual sector, is so valuable.

Chart 7. The distribution of methodologies across sector groups and Standards Bodies for projects rated by BeZero Carbon.

Rather than picking a winner, we note one area where there is consensus and which would unquestionably help the VCM: Standards Bodies scaling their in-house capabilities to meet rising supply-side pressures. Last year’s doubling in capital seeking to invest in carbon projects requires a matching build-up of capacity, to keep pace with demand while harmonising and improving methodological rigour.

We also think competition between Standards Bodies is positive so long as incumbents and contenders enforce comparable transparency and disclosure requirements.

Conclusions

The VCM is only as credible as the various methodologies or protocols it relies on to determine the GHG benefits associated with carbon credits. Standards Bodies prescribe methodologies for different sectors and stipulate the parameters a project must meet to be verified to issue credits.

The VCM has intentionally opted for methodologies to be designed broadly. This makes a lot of sense if the objective is to encourage developers and investors to participate more in the VCM, and drive innovation. But it also makes total equivalence of outcome difficult to guarantee.

Much of the focus has been on incorporating mainstream scientific developments into methodology updates so that they become common practice in the VCM. The latest push towards statistical matching and dynamic baselines is a good example of this process at work.

Yet, BeZero’s analysis finds that the cadence of methodology reviews does not alone dictate the quality of the carbon credits produced. Some approaches can have better outcomes than others and some modern techniques, while highly sophisticated, may not guarantee accuracy. Sense and sensibility still play an essential role.

Our analysis also warns against assuming that a methodology is, in and of itself, enough to determine quality. The way methodologies are implemented varies substantially from project to project, and so, as a result, does the carbon efficacy of the credits issued. Some schemes, such as CORSIA, rely on this step alone. Article 6, meanwhile, presents an interesting path towards seeking agreement on fewer, more specific methodologies.

Finally, the diverse manner in which methodologies are carried out underlines the need for independent carbon ratings. Developing and assessing projects is highly complex and there’s still so much to learn, often in real time.

By taking a probabilistic approach to project assessment (rather than a binary pass or fail), ratings ensure that the degree to which a project employs appropriate methodological considerations is fairly reflected. This approach also bridges the gap between seeing quality as binary and the realities on the ground.

As ratings processes and insights mature, they can provide a valuable feedback loop for the VCM value chain, including informing Standards Bodies about which approaches the wider market considers higher quality.